The 1-5-50 model to validate AI features

Goldcast CPO Lauren Creedon shares her three-step framework that turns AI feature launches from risky bets into trust-building exercises.

Most AI features fail not because the technology doesn't work—they fail because product teams skip the messy middle of validation and rush straight to launch.

During the 2025 AI Product Leaders Summit, Lauren Creedon, Chief Product Officer at Goldcast, shared a framework that's helping her team navigate one of the trickiest challenges in modern product development: introducing AI into a mature product without derailing everything that already works.

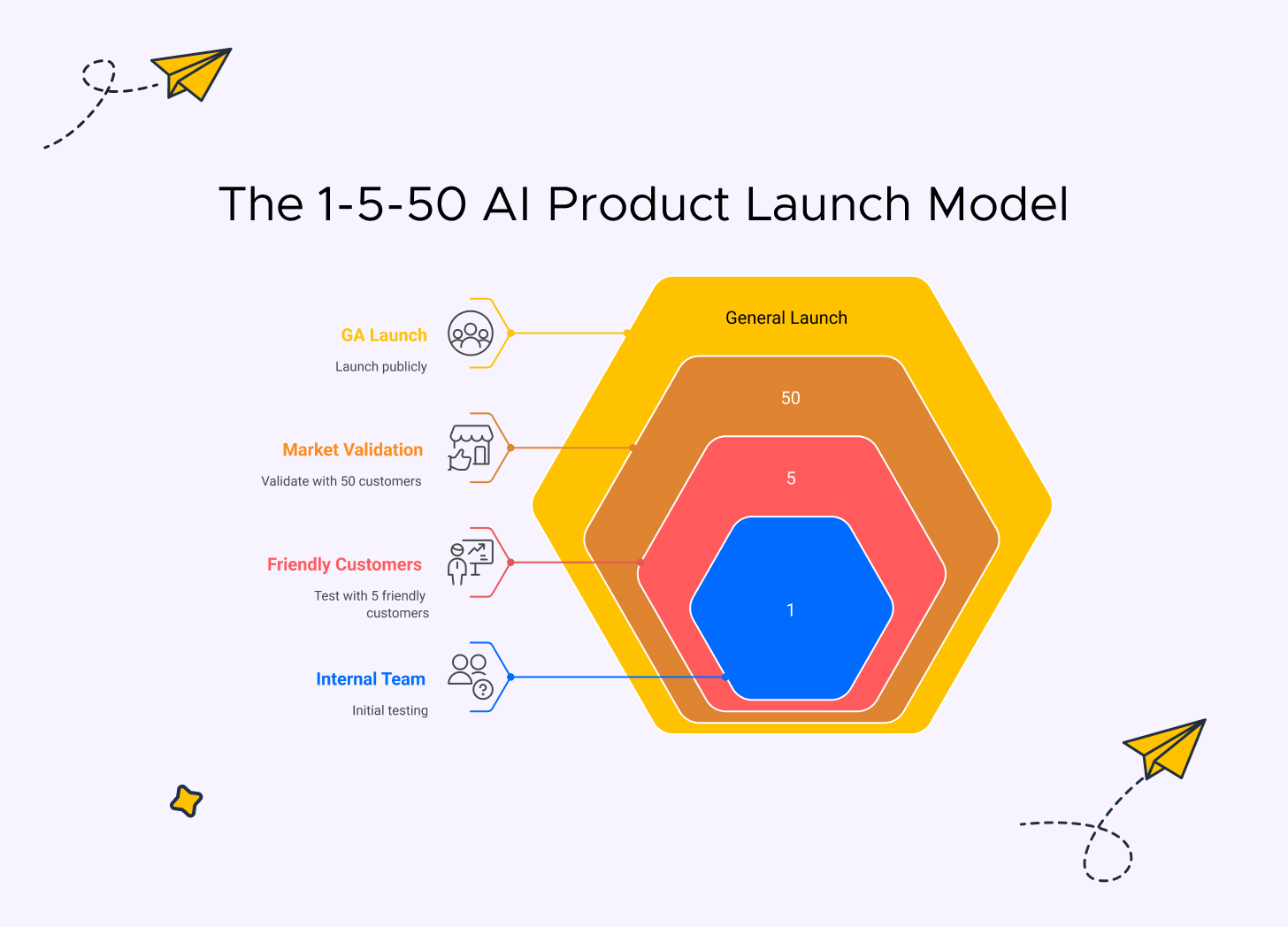

Her "1-5-50 model" isn't flashy. It won't make for great demo day announcements. But it's exactly the kind of disciplined, customer-centric approach that separates AI features people actually use from the ones that quietly get deprecated six months after launch.

If you're a product leader wrestling with how to add AI capabilities to an established product—one with paying customers, established workflows, and revenue you can't afford to jeopardize—this framework offers a practical path forward.

The AI Integration Problem Nobody Talks About

Here's what makes AI different from most feature launches: it introduces what Lauren calls "novelty risk."

Your customers don't inherently trust AI yet. They've heard the hype, seen the failures, and many worry about job security. When you add AI to your product, you're not just shipping new functionality; you're asking users to fundamentally change how they work and who (or what) they trust to do it.

As Lauren explained during our summit: "Ultimately, trust is the product. The way we design AI workflows matters. By giving users control and ensuring that humans remain in the loop, we reduce novelty risk and increase adoption."

This is why the traditional "build it, ship it, iterate" approach that works for most features can backfire spectacularly with AI. You can't afford to discover that your AI feature confuses users or breaks critical workflows only after you've rolled it out to your entire customer base.

The 1-5-50 model solves this by treating AI integration as an incremental trust-building exercise rather than a traditional feature launch.

How the 1-5-50 Model Works

The framework is elegantly simple: test with 1 (your internal team), then 5 (friendly customers), then 50 (market validation)—and only then consider a full launch.

Let's break down each stage and why it matters.

Stage 1: Ship Working Code Internally First

The first stage isn't about prototypes or design mockups. It's about actual working code deployed to your internal team.

As Lauren emphasized: "The '1' stage is about shipping working code internally first. This allows your product teams to play with it early, test usability, and spot blockers before the solution reaches users."

This is where you fail fast in a safe space. Your team becomes the crash test dummy, discovering edge cases, confusing UX patterns, and unexpected AI behaviors—all before any customer sees it.

Why this matters for mature products: When Goldcast explored AI capabilities for video content workflows, they started with their own marketing team using the features for real work. Not sanitized demos or contrived use cases—actual content creation for actual campaigns.

They discovered usability issues that would have been embarrassing (and expensive) to fix after a customer launch. More importantly, they learned which AI capabilities genuinely created value versus which ones were just technically impressive but practically useless.

The psychology here is crucial: internal testing gives your team permission to be honest about what doesn't work. Nobody's trying to justify a sunk cost or defend a decision to stakeholders. It's pure learning.

What to watch for in this stage:

- Does the AI actually solve the problem you think it does?

- Can your team articulate the value without resorting to "it's AI-powered"?

- What's the cognitive load of understanding what the AI is doing?

- Where does the AI do something surprising (good or bad)?

- What questions does your team have that you can't answer yet?

The goal isn't perfection—it's to identify the obvious deal-breakers before you waste anyone else's time.

Stage 2: Test Usability with 5 Friendly Customers

Once internal testing builds confidence, it's time to expand to five carefully chosen customers who are open to experimentation and will give you brutally honest feedback.

Lauren explained: "The '5' phase is all about testing usability. You work with five friendly customers to validate your assumptions and see if your solution resonates with real-world use cases. The feedback you gain here can make or break the next steps."

These early adopters act as your reality check. They'll tell you if your AI solution actually fits their workflow or if it's a solution looking for a problem.

Why five specifically? It's enough to see patterns in feedback but small enough to manage personally. You can do customer calls, watch screen recordings, and truly understand their experience. At 50 customers, that level of intimacy becomes impossible.

The critical question this stage answers: Does your AI feature integrate into real customer workflows, or does it require them to completely change how they work?

Lauren shared a powerful insight here: these friendly customers aren't just testing your feature—they're testing your assumptions about the problem you're solving. This is where many AI features die quietly, and that's exactly as it should be.

If your "friendly five" don't love it, your broader customer base certainly won't.

What makes a good "friendly five" customer:

- They already trust you and will be honest about what doesn't work

- They represent a meaningful segment of your customer base

- They have a genuine problem your AI feature is trying to solve

- They're willing to give detailed feedback, not just thumbs up/down

- They understand this is experimental and won't punish you for imperfection

This phase is also where you start to understand the onboarding challenge. If your friendly customers are confused about when to use the AI feature or what it actually does, that's a signal you need to fix before expanding further.

Stage 3: Scale to 50 for Market Validation

After refining your AI feature based on feedback from your initial five customers, scaling to 50 provides the data you need to make a go/no-go decision on a full launch.

Lauren described this stage: "The '50' phase is where we roll out solutions to fifty customers to assess scalability, adoption barriers, and overall market demand. It's critical here to invest time in measuring workflows and ROI alignment."

At 50 customers, you're no longer testing if the feature works—you're testing if it works at scale.

What you're actually measuring at this stage:

- Adoption rate: What percentage of the 50 actually use the feature?

- Time-to-value: How long does it take new users to get value from the AI?

- Support burden: What questions are customers asking? What's breaking?

- Workflow integration: Are customers incorporating this into their regular workflow, or is it a novelty they try once?

- ROI alignment: Can customers articulate the value they're getting? Would they pay more for this feature?

This is your go/no-go decision point. If adoption at 50 customers is strong, your onboarding is smooth, and customers can clearly articulate value—you're ready to consider a broader launch.

If not, you've just saved yourself from an expensive mistake.
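Most of the Stage 3 measurements are simple ratios, so it can help to see them written out. Below is a minimal Python sketch of how a team might tally a 50-customer cohort and apply a go/no-go check. The record fields (`used_feature`, `days_to_first_value`, `support_tickets`, `active_weeks`), the "half the pilot weeks" definition of regular use, and every threshold are hypothetical placeholders. They are not Goldcast's criteria or anything Lauren specified. ROI alignment is left out because it comes from customer conversations rather than counts.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Tuple


@dataclass
class CohortCustomer:
    """Hypothetical per-customer record collected during the 50-customer stage."""
    name: str
    used_feature: bool                    # tried the AI feature at least once
    days_to_first_value: Optional[float]  # None if the customer never reached value
    support_tickets: int                  # tickets/questions filed about the AI feature
    active_weeks: int                     # pilot weeks in which the feature was used


def cohort_metrics(cohort: List[CohortCustomer], pilot_weeks: int) -> dict:
    """Summarize adoption, time-to-value, support burden, and workflow integration."""
    n = len(cohort)
    users = [c for c in cohort if c.used_feature]
    ttv = [c.days_to_first_value for c in users if c.days_to_first_value is not None]
    # "Regular use" = active in at least half the pilot weeks (a hypothetical cutoff).
    regular = [c for c in users if c.active_weeks >= pilot_weeks / 2]
    return {
        "adoption_rate": len(users) / n,
        "median_days_to_value": median(ttv) if ttv else None,
        "tickets_per_customer": sum(c.support_tickets for c in cohort) / n,
        "regular_use_rate": len(regular) / n,
    }


def go_no_go(m: dict,
             min_adoption: float = 0.6,
             max_days_to_value: float = 7.0,
             max_tickets: float = 0.5,
             min_regular_use: float = 0.4) -> Tuple[bool, List[str]]:
    """Return (go, reasons). All default thresholds are illustrative placeholders."""
    reasons = []
    if m["adoption_rate"] < min_adoption:
        reasons.append("adoption below target")
    if m["median_days_to_value"] is None or m["median_days_to_value"] > max_days_to_value:
        reasons.append("time-to-value too slow")
    if m["tickets_per_customer"] > max_tickets:
        reasons.append("support burden too high")
    if m["regular_use_rate"] < min_regular_use:
        reasons.append("used as a novelty, not part of the regular workflow")
    return (not reasons, reasons)


# Example: 35 of 50 customers adopt the feature; the other 15 each file one
# onboarding question but never get started.
cohort = (
    [CohortCustomer(f"c{i}", True, 3.0, 0, 6) for i in range(35)]
    + [CohortCustomer(f"c{i}", False, None, 1, 0) for i in range(35, 50)]
)
m = cohort_metrics(cohort, pilot_weeks=8)
go, reasons = go_no_go(m)
print(m, go, reasons)
```

Returning the list of failed checks alongside the verdict keeps the decision explainable. A "no-go" then points at the specific gap to fix, such as onboarding or support load, instead of being a bare verdict.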

Why the 1-5-50 Model Works for Mature Products

The genius of this framework is that it protects the three things you can't afford to jeopardize when adding AI to an established product:

1. Existing workflows customers depend on. By testing incrementally, you ensure your AI feature enhances rather than disrupts how customers already work. If it doesn't fit naturally into existing workflows by the time you hit 50 customers, you know you have a problem.

2. Established trust you can't afford to break. Lauren's emphasis on "trust is the product" is critical here. Each stage of the 1-5-50 model builds trust incrementally. Your internal team trusts it, then your friendly five, then your 50, and only then do you ask your entire customer base to trust it.

3. Revenue you can't risk on unproven features. Mature products have paying customers. Those customers are paying for value they're already receiving. The 1-5-50 model ensures you're adding value rather than creating confusion, frustration, or reasons to churn.

The Discipline Problem: Why Most Teams Skip This

Here's the uncomfortable truth: most product teams won't follow the 1-5-50 model because it requires patience in an industry that rewards speed.

There's immense pressure to ship AI features fast. Competitors are announcing "AI-powered" capabilities. Executives want to talk about AI in board meetings. Engineers are excited to work on cutting-edge technology.

The 1-5-50 model forces you to slow down and validate at each stage. That's psychologically difficult when everyone around you is racing to ship.

But as Lauren warned: "AI is only a multiplier if it's addressing a high-value point of friction. Without disciplined product strategy, it becomes more of a distraction than anything else."

Translation: shipping AI features fast doesn't matter if nobody uses them.

The companies getting this right aren't the ones with the most AI features. They're the ones whose AI features actually solve real problems and achieve meaningful adoption.

What Goldcast Learned: Video Workflows as a Case Study

When Goldcast explored AI for video content workflows, they didn't chase every shiny capability. They started with basic AI features like auto-captions and transcription: table-stakes functionality that solved clear pain points for B2B marketing teams.

But even with these straightforward use cases, the 1-5-50 model proved essential. They discovered through internal testing that their first implementation of auto-captions had accuracy issues with technical jargon. Their five customers revealed that the AI sometimes hallucinated words that sounded similar but changed the meaning of technical content.

These weren't issues they could have caught through code review or QA testing. They required real usage with real content in real workflows.

By the time they scaled to 50 customers, they had refined the feature to the point where adoption was strong and support burden was minimal. More importantly, they had built customer trust in Goldcast's AI capabilities, making future AI features easier to adopt.

Key Takeaways for Product Leaders

Lauren's 1-5-50 model offers several critical lessons for product leaders navigating AI integration:

1. Start small to learn fast. Internal testing with working code (not prototypes) allows your team to discover problems in a safe environment. This isn't about perfection; it's about failing fast before customers see the failures.

2. Validate with friendly customers who will be honest. Your "friendly five" should be chosen for their willingness to give brutal feedback, not their likelihood to praise everything. These customers are your reality check on whether you're actually solving a real problem.

3. Scale with caution and clear metrics. At 50 customers, you should be measuring adoption rate, time-to-value, support burden, and ROI alignment. This data drives your go/no-go decision for broader launch.

4. Don't chase AI trends; solve customer problems. The most successful AI features aren't the most technically impressive ones. They're the ones that address high-value friction points in customer workflows. Discipline in product strategy matters more than cutting-edge AI capabilities.

5. Preserve trust by keeping humans in the loop. As Lauren emphasized, trust is the product. Customers need to feel in control of AI features, not at their mercy. Designing AI workflows that keep humans in the loop reduces novelty risk and increases adoption.

The Bigger Picture: AI as Trust-Building Exercise

The 1-5-50 model fundamentally reframes how we think about AI feature launches. It's not about technology deployment—it's about trust-building at scale.

Each stage builds confidence:

- Stage 1: Your team trusts the AI works

- Stage 2: Your friendly customers trust it solves their problem

- Stage 3: The broader market trusts it's worth adopting

Rush any of these stages, and you're asking customers to trust something you haven't fully validated yourself.

As Lauren wisely concluded: "Build early, test multiple alternatives, and fail fast when your customers aren't craving what you're making."

For companies navigating the complex challenge of adding AI to mature products, the 1-5-50 framework provides a practical, disciplined path forward. It won't make for exciting launch announcements, but it will make for AI features that customers actually use, trust, and value.

And in the end, that's what matters.

This article is based on insights from Lauren Creedon's presentation at the AI Product Leaders Summit hosted on November 25, 2025. Lauren is the Chief Product Officer at Goldcast, where she leads product strategy and AI integration for their video content platform.