The AI mistake that's killing product delight

Learn why most AI features fail—and what separates products people admire from products people love. The answer? It's not about what your AI can do. It's about how your AI makes users feel.

During the AI Product Leaders Summit in November 2025, I sat down with Janna Bastow (CEO of ProdPad), Nesrine Changuel (Founder of Product Excellence), Netali Jakubovitz (VP of Product at Maze), and C. Todd Lombardo to talk about building AI experiences that actually connect with users.

What we discovered? Most teams are making the same costly mistake—and it's not what you think.

The technology-first trap

C. Todd dropped a truth bomb that hit hard:

"Companies push the technology a little too much and they sacrifice the humanness of it."

Think about the cautionary tales we've all witnessed. Microsoft's Tay chatbot? It lasted less than 24 hours before becoming a PR nightmare. Google Duplex? Amazing voice technology with frighteningly realistic human speech that nobody could figure out how to actually use in their daily lives.

Janna Bastow put it perfectly:

"It feels like that was technology that was a little bit before its time. If you throw cool tech out there, it'll do things, but sometimes it'll do bad things."

The pattern is clear: When you lead with what AI can do instead of what users need, you create solutions looking for problems. Impressive demos that fall flat in real-world use.

C. Todd and Janna agreed that effective AI design means balancing technological experimentation with a clear understanding of user needs, and crafting interactions that feel helpful and human.

Your AI will hallucinate (and that's not the real problem)

Here's where it gets interesting. Netali Jakubovitz shared something every product leader needs to hear:

"Don't be afraid your AI will hallucinate. It will. So just plan for it. Either don't risk it if these are ethical risks, or really put the guardrails there."

The mistake isn't that AI makes errors—it's that teams pretend it won't.

Her advice comes down to two options. Where a wrong answer carries ethical risk, keep the AI out of that decision. Everywhere else, design the guardrails up front so user trust survives the inevitable error.

Think about it: Would you rather have a product that claims 100% accuracy and fails spectacularly, or one that sets clear expectations and handles mistakes gracefully?

The best product leaders don't try to achieve perfection. They build guardrails that maintain trust even when things go sideways.
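To make "plan for it" concrete, here is a minimal sketch of what a guardrail layer could look like. It is an illustration only, not something the panel presented: the `DraftAnswer` fields, the `high_risk` flag, the 0.7 threshold, and both fallback messages are hypothetical, and how you actually score confidence depends entirely on your stack.

```python
from dataclasses import dataclass, field

# Hypothetical user-facing messages; wording is an assumption for illustration.
ESCALATION = "This one needs a human. I've passed your question to our team."
FALLBACK = "I'm not confident in my answer here, so I'd rather not guess."


@dataclass
class DraftAnswer:
    """An AI-generated answer before it is shown to the user (hypothetical shape)."""
    text: str
    confidence: float                                  # assumed score in [0, 1]
    sources: list = field(default_factory=list)       # assumed supporting references


def apply_guardrails(draft: DraftAnswer, high_risk: bool,
                     min_confidence: float = 0.7) -> str:
    """Decide what the user sees, assuming the model will sometimes be wrong."""
    # "Don't risk it": high-risk topics never get an unreviewed AI answer.
    if high_risk:
        return ESCALATION
    # "Put the guardrails there": low-confidence or unsourced answers
    # are replaced with an honest fallback instead of a confident guess.
    if draft.confidence < min_confidence or not draft.sources:
        return FALLBACK
    # Otherwise show the answer, with its sources so the user can verify it.
    return f"{draft.text}\n\nSources: {', '.join(draft.sources)}"


if __name__ == "__main__":
    answer = DraftAnswer("Your plan renews on the 1st.", 0.92, ["billing-faq"])
    print(apply_guardrails(answer, high_risk=False))
```

The point of the sketch is the order of the checks: the ethical-risk decision comes first, and the product admits uncertainty before it ever shows an answer it can't back up.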

The delight paradox nobody's talking about

Nesrine Changuel revealed something that changes how we should think about AI features:

"Most teams stop at the functional need. They build things that work great, and that's really dangerous because the functional layer is the easiest one to compete with and replicate."

Here's the paradox: AI makes it easier to build functional features, which means functional features matter less than ever.

She defined "delight" as more than meeting functional needs:

"Delights happen when a product creates emotional connection with the users. Emotional connections are created when we do not only solve for the functional needs, but we also solve for emotional needs."

Nesrine breaks delight into three core pillars:

- Remove friction: Make things work smoothly
- Anticipate needs: Show up before they ask
- Exceed expectations: Surprise them with something they didn't know they wanted

Your competitors can copy your AI features within months. They can't copy the emotional connection your product creates, and that is the real competitive advantage.

The expectation shift that's changing everything

Janna highlighted a challenge that's reshaping product design:

"Expectations are so tricky, right? Because they're constantly changing. What people expected a few years ago is different than what they expect today."

She noted that users now expect chatbots to act like humans, and failure to meet those expectations produces disappointment rather than engagement:

"You're met with a search bar or a chatbot that doesn't do that. You're like, oh, it's an old one."

ChatGPT didn't just raise the bar for chatbots—it raised the bar for every interface. Users now expect products to understand context, remember conversations, and respond naturally.

This creates a fascinating problem: Every year you wait to improve your AI experience, the gap between user expectations and your product widens.

For product teams, that means revisiting the experience continuously: what felt advanced at launch can feel dated once users get used to better tools elsewhere.

Research is your secret weapon

Both Netali and Janna emphasized something crucial: you can't guess your way to delight.

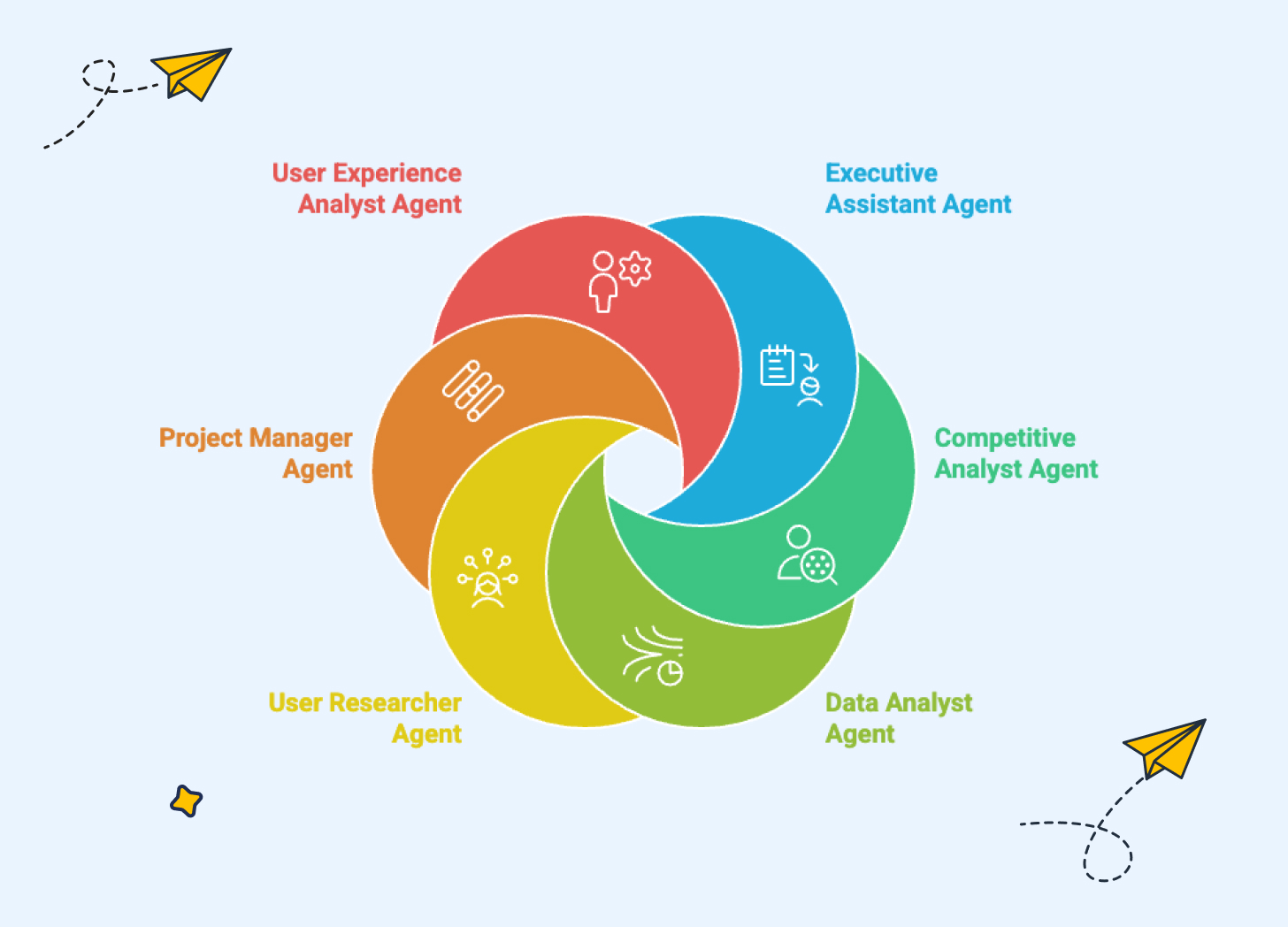

Netali introduced innovative methods like AI-moderated interviews that allow teams to collect qualitative insights at scale—particularly valuable during early exploratory phases:

"An AI agent is basically moderating an interview conversation. You define the research goals and send the agent, and they would collect the insights to bring you answers to those research goals."

But here's what matters more than the method: Janna's concept of "emotional segmentation."

Instead of asking "What features do users want?", ask:

"What is the emotional state that your ideal user starts with, and how do you change that emotional state using your product?"

A frustrated user who becomes confident. An overwhelmed user who feels clarity. A skeptical user who feels trust.

That transformation? That's where delight lives.

Janna added that qualitative feedback is crucial for understanding customer emotions and designing products that transform emotional states—not just complete tasks.

The bottom line

The best AI products aren't the ones with the most advanced technology. They're the ones that make users feel something.

Microsoft, Google, and countless startups have proven that impressive technology without emotional connection creates products people admire but don't love.

Your AI features should make your product feel more human, not less. They should create emotional connection, not just functional capability.

The question isn't "What can our AI do?"

The question is "How does our AI make users feel?"